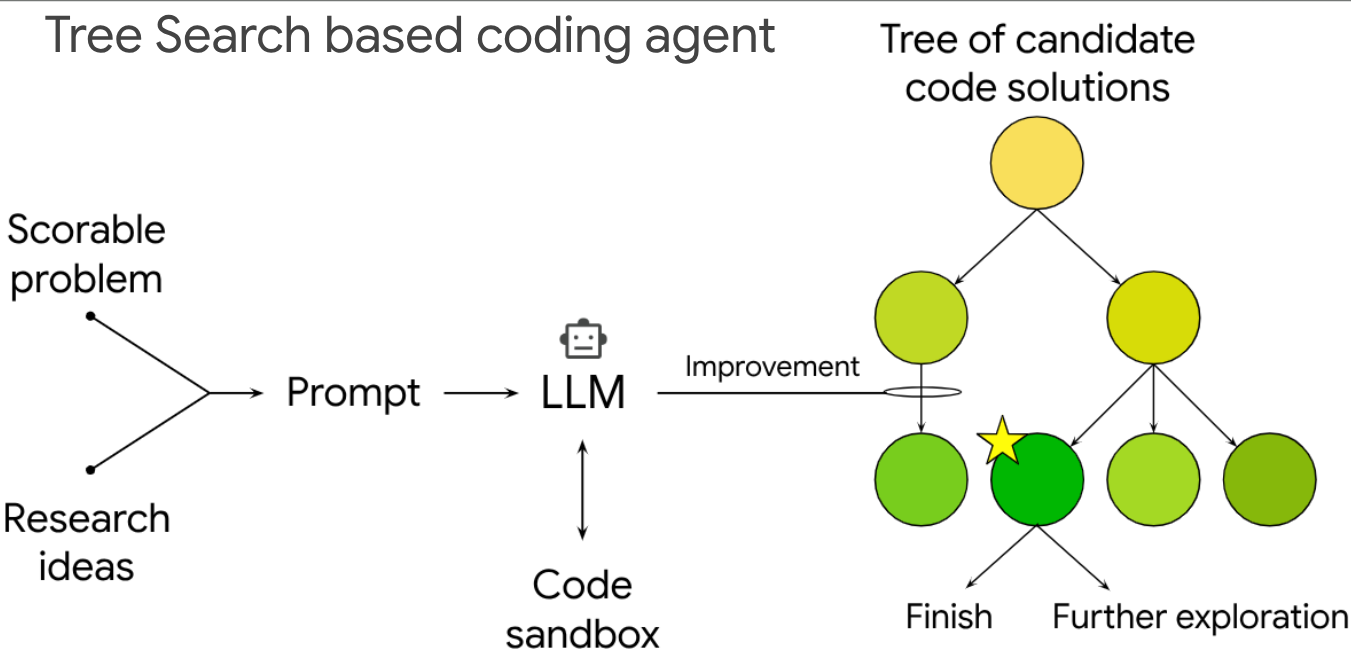

I am a Research Scientist at Google Research, where I focus on developing LLM agents with specialized capabilities for scientific discovery and automated coding. My work centers on enabling models to reason over scientific data and complex codebases in order to accelerate research workflows. In parallel, I lead research on evaluating video understanding in multimodal models, with the goal of making digital content more accessible to blind and low-vision users.

I am motivated by AI's potential to accelerate scientific breakthroughs and create assistive technologies that empower users. My contributions to healthcare and accessibility were featured in the documentary Healed through A.I. and recognized with a 2025 Bronze Cannes Lions award for Transformative Design.

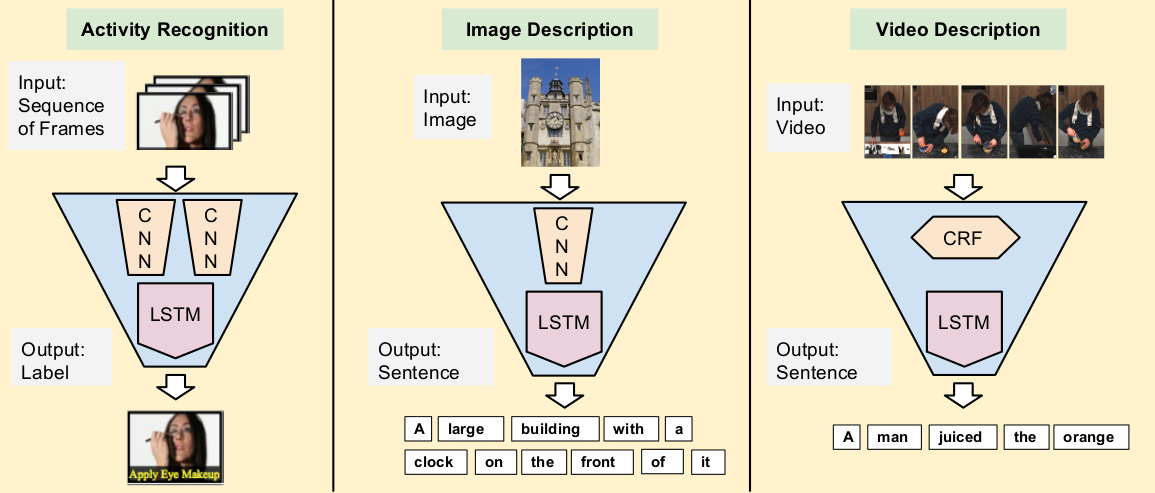

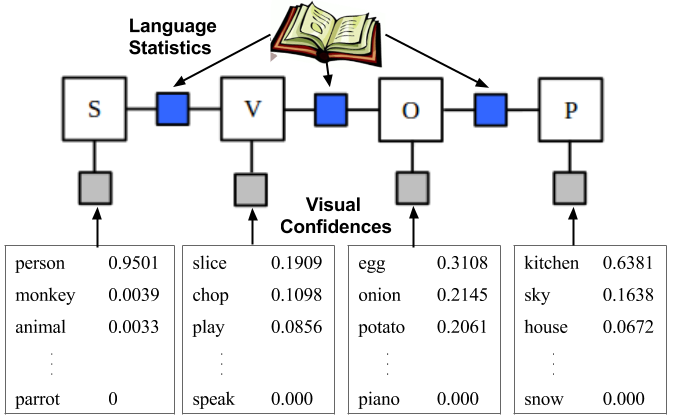

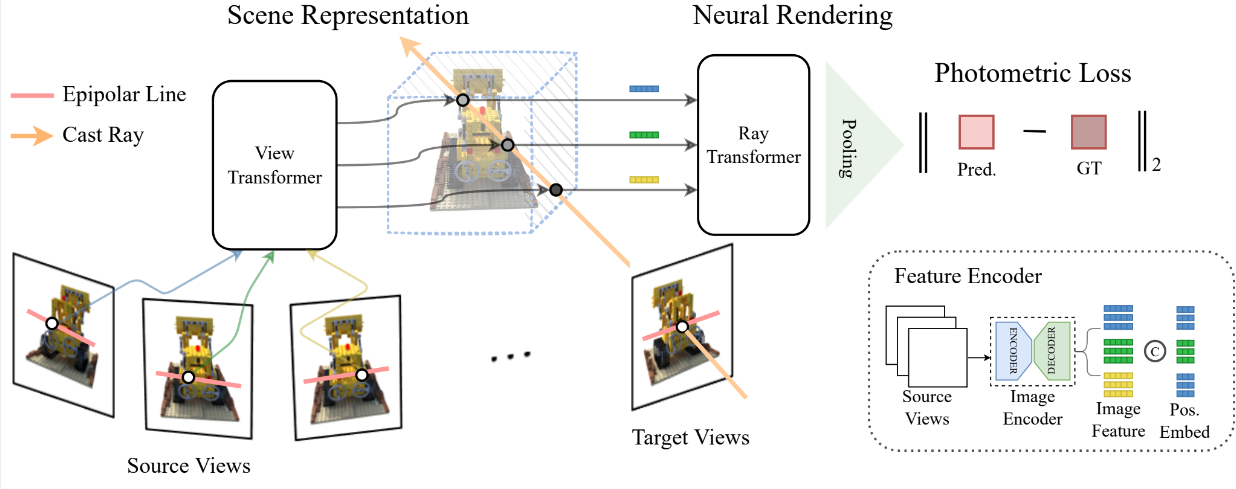

I earned my Ph.D. from UT Austin, advised by Ray Mooney and co-advised by Kate Saenko and Trevor Darrell. This collaborative environment, enriched by research stays at UC Berkeley and internships at Google Brain, enabled my doctoral work to contribute foundational models for video captioning and sequence-to-sequence multimodal reasoning.

Research Focus

Agents & Science

Designing autonomous agents that can write scientific code, parse the literature, and assist with complex research reasoning.

Multimodal Learning

Developing models that integrate visual perception, speech, and language reasoning for video understanding.

AI for Accessibility

Using ML to create assistive technologies that empower users with diverse vision, speech, and motor capabilities.

Selected Talks

Publications

CBGB The Cloud-Based Geospatial Benchmark: Challenges and LLM Evaluation

Jeffrey A. Cardille, Renee Johnston, Simon Ilyushchenko, Johan Kartiwa, Zahra Shamsi, Matthew Abraham, Khashayar Azad, Kainath Ahmed, Emma Bergeron Quick, Nuala Caughie, Noah Jencz, Karen Dyson, Andrea Puzzi Nicolau, Maria Fernanda Lopez-Ornelas, David Saah, Michael Brenner, Subhashini Venugopalan, Sameera S Ponda

Terrabytes @ ICML 2025, PMLR 2025

Using large language models to accelerate communication for eye gaze typing users with ALS

Shanqing Cai, Subhashini Venugopalan, Katie Seaver, Xiang Xiao, Katrin Tomanek, Sri Jalasutram, Meredith Ringel Morris, Shaun Kane, Ajit Narayanan, Robert L. MacDonald, Emily Kornman, Daniel Vance, Blair Casey, Steve M. Gleason, Philip Q. Nelson, Michael P. Brenner

Nature Comms 2024

SpeakFaster Observer: Long-Term Instrumentation of Eye-Gaze Typing for Measuring AAC Communication

Shanqing Cai, Subhashini Venugopalan, Katrin Tomanek, Shaun Kane, Meredith Ringel Morris, Richard Cave, Bob MacDonald, Jon Campbell, Blair Casey, Emily Kornman, Daniel Vance, Jay Beavers

CHI 2023

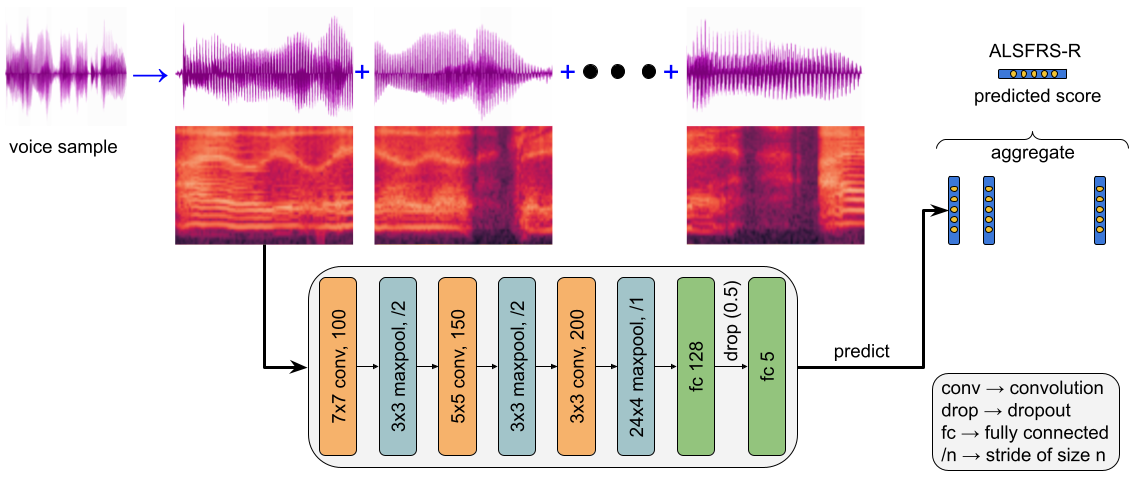

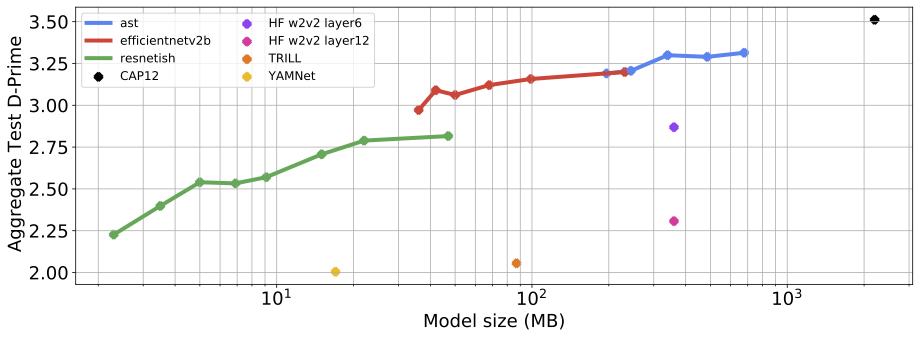

A machine-learning based objective measure for ALS disease severity

Fernando G. Vieira*, Subhashini Venugopalan*, Alan S. Premasiri, Maeve McNally, Aren Jansen, Kevin McCloskey, Michael P. Brenner, Steven Perrin

npj Digital Medicine 2022

Integrating deep learning and unbiased automated high-content screening to identify complex disease signatures in human fibroblasts

Lauren Schiff, Bianca Migliori, Ye Chen, Deidre Carter, Caitlyn Bonilla, Jenna Hall, Minjie Fan, Edmund Tam, Sara Ahadi, Brodie Fischbacher, Anton Geraschenko, Christopher J. Hunter, Subhashini Venugopalan, and 30 others

Nature Comms 2022

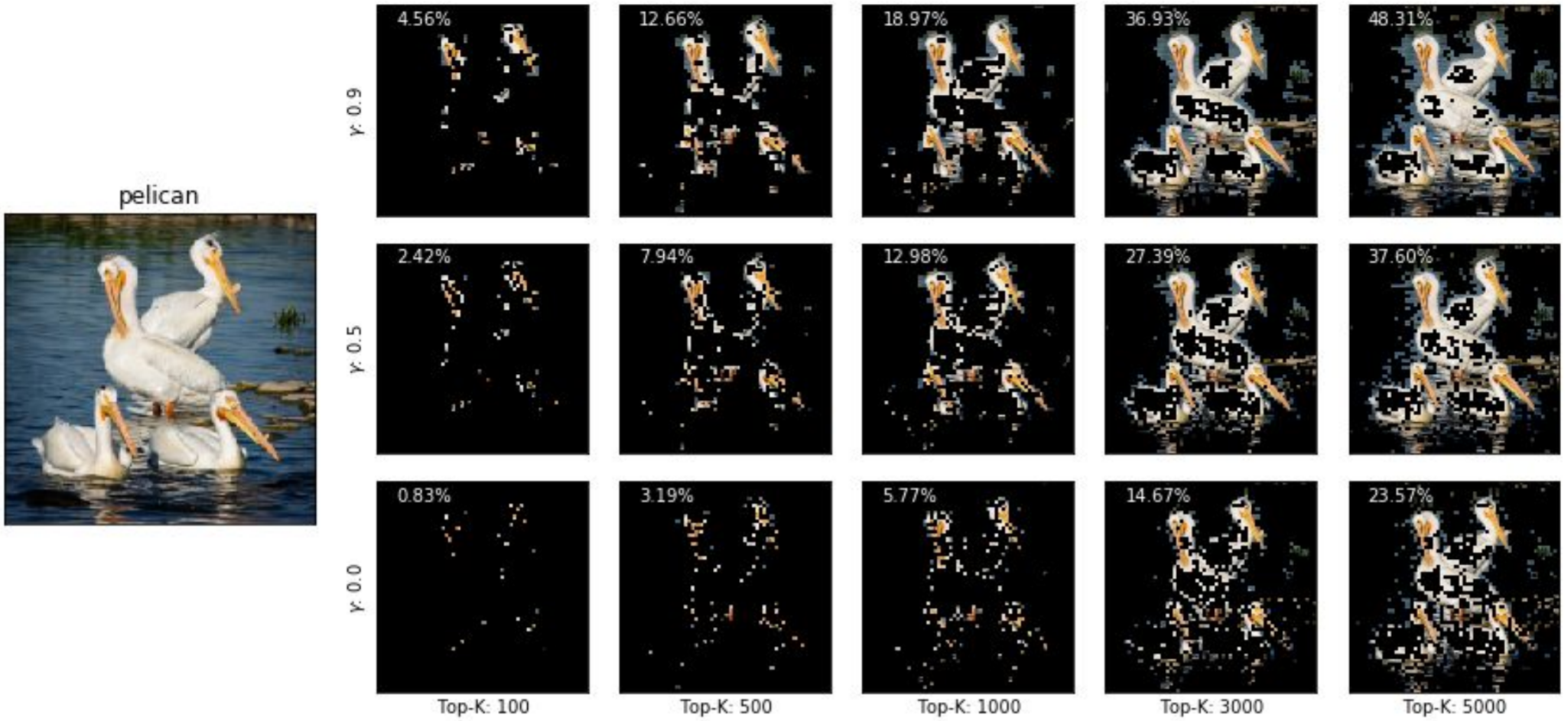

Guided Integrated Gradients: An Adaptive Path Method for Removing Noise

Andrei Kapishnikov, Subhashini Venugopalan, Besim Avci, Ben Wedin, Michael Terry, Tolga Bolukbasi

CVPR 2021

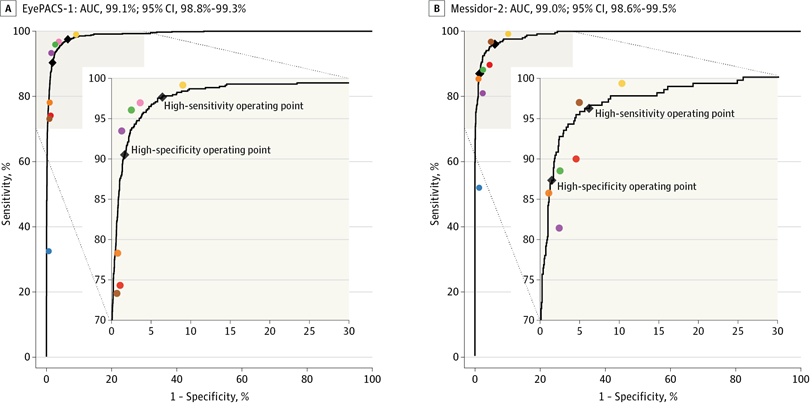

Predicting Risk of Developing Diabetic Retinopathy using Deep Learning

Ashish Bora, Siva Balasubramanian, Boris Babenko, Sunny Virmani, Subhashini Venugopalan, Akinori Mitani, Guilherme de Oliveira Marinho, Jorge Cuadros, Paisan Ruamviboonsuk, Greg S Corrado, Lily Peng, Dale R Webster, Avinash V Varadarajan, Naama Hammel, Yun Liu, Pinal Bavishi

The Lancet Digital Health 2021

Scientific Discovery by Generating Counterfactuals using Image Translation

Arunachalam Narayanaswamy*, Subhashini Venugopalan*, Dale R. Webster, Lily Peng, Greg Corrado, Paisan Ruamviboonsuk, Pinal Bavishi, Rory Sayres, Abigail Huang, Siva Balasubramanian, Michael Brenner, Philip Nelson, Avinash V. Varadarajan

MICCAI 2020

Predicting optical coherence tomography-derived diabetic macular edema grades from fundus photographs using deep learning

Avinash V Varadarajan, Pinal Bavishi, Paisan Ruamviboonsuk, Peranut Chotcomwongse, Subhashini Venugopalan, Arunachalam Narayanaswamy, Jorge Cuadros, Kuniyoshi Kanai, George Bresnick, Mongkol Tadarati, Sukhum Silpa-Archa, Jirawut Limwattanayingyong, Variya Nganthavee, Joseph R Ledsam, Pearse A Keane, Greg S Corrado, Lily Peng, Dale R Webster

Nature Comms 2020

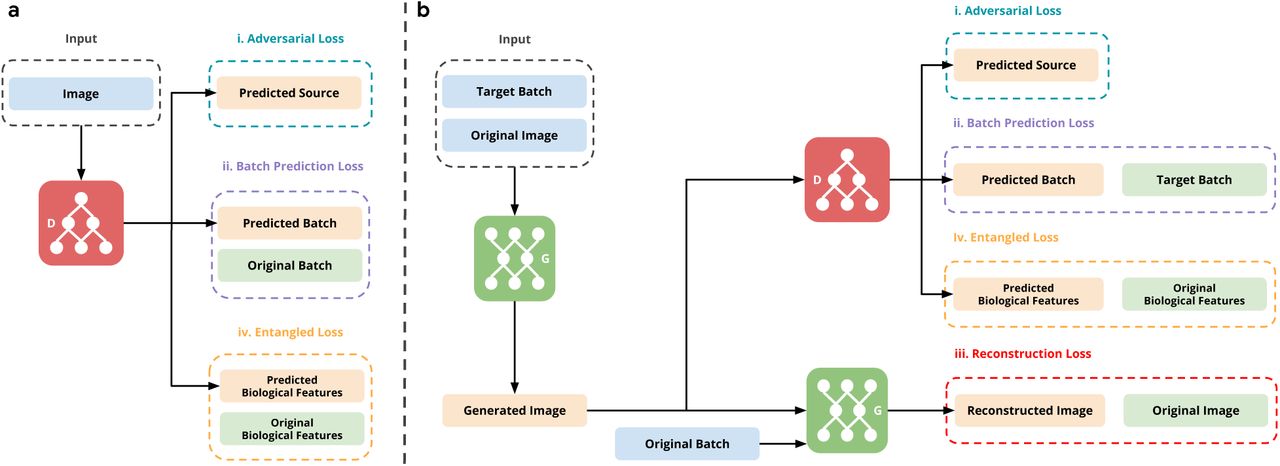

Batch Equalization with a Generative Adversarial Network

Wesley Wei Qian, Cassandra Xia, Subhashini Venugopalan, Arunachalam Narayanaswamy, Jian Peng, D Michael Ando

ECCB 2020

It's easy to fool yourself: Case studies on identifying bias and confounding in bio-medical datasets

Subhashini Venugopalan*, Arunachalam Narayanaswamy*, Samuel Yang*, Anton Geraschenko, Scott Lipnick, Nina Makhortova, James Hawrot, Christine Marques, Joao Pereira, Michael Brenner, Lee Rubin, Brian Wainger, Marc Berndl

NeurIPS LMRL 2019

Applying Deep Neural Network Analysis to High-Content Image-Based Assays

Samuel J Yang*, Scott L Lipnick*, Nina R Makhortova*, Subhashini Venugopalan*, Minjie Fan*, Zan Armstrong, Thorsten M Schlaeger, Liyong Deng, Wendy K Chung, Liadan O'Callaghan, Anton Geraschenko, Dosh Whye, Marc Berndl, Jon Hazard, Brian Williams, Arunachalam Narayanaswamy, D Michael Ando, Philip Nelson, Lee L Rubin

SLAS DISCOVERY: Advancing Life Sciences R&D 2019

Semantic Text Summarization of Long Videos

Shagan Sah, Sourabh Kulhare, Allison Gray, Subhashini Venugopalan, Emily Prud'hommeaux, Raymond Ptucha

WACV 2017

Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs

Varun Gulshan, Lily Peng, Marc Coram, Martin C Stumpe, Derek Wu, Arunachalam Narayanaswamy, Subhashini Venugopalan, Kasumi Widner, Tom Madams, Jorge Cuadros, Ramasamy Kim, Rajiv Raman, Philip C Nelson, Jessica L Mega, Dale R Webster

JAMA 2016